Fastest Mixing Markov Chain

## Warning in desc$Date: partial match of 'Date' to 'Date/Publication'Introduction

This example is derived from the results in Boyd, Diaconis, and Xiao (2004), section 2. Let G=(V,E) be a connected graph with vertices V={1,…,n} and edges E⊆V×V. Assume that (i,i)∈E for all i=1,…,n, and (i,j)∈E implies (j,i)∈E. Under these conditions, a discrete-time Markov chain on V will have the uniform distribution as one of its equilibrium distributions. We are interested in finding the Markov chain, constructing the transition probability matrix P∈Rn×n+, that minimizes its asymptotic convergence rate to the uniform distribution. This is an important problem in Markov chain Monte Carlo (MCMC) simulations, as it directly affects the sampling efficiency of an algorithm.

The asymptotic rate of convergence is determined by the second largest eigenvalue of P, which in our case is μ(P):=λmax(P−1n11T) where λmax(A) denotes the maximum eigenvalue of A. As μ(P) decreases, the mixing rate increases and the Markov chain converges faster to equilibrium. Thus, our optimization problem is

minimizePλmax(P−1n11T)subject toP≥0,P1=1,P=PTPij=0,(i,j)∉E.

The element Pij of our transition matrix is the probability of moving from state i to state j. Our assumptions imply that P is nonnegative, symmetric, and doubly stochastic. The last constraint ensures transitions do not occur between unconnected vertices.

The function λmax is convex, so this problem is solvable

in CVXR. For instance, the code for the Markov chain in Figure 2

below (the triangle plus one edge) is

P <- Variable(n,n)

ones <- matrix(1, nrow = n, ncol = 1)

obj <- Minimize(lambda_max(P - 1/n))

constr1 <- list(P >= 0, P %*% ones == ones, P == t(P))

constr2 <- list(P[1,3] == 0, P[1,4] == 0)

prob <- Problem(obj, c(constr1, constr2))

result <- solve(prob)where we have set n=4. We could also have specified P1=1 with sum_entries(P,1) == 1, which uses the

sum_entries atom to represent the row sums.

Example

In order to reproduce some of the examples from Boyd, Diaconis, and Xiao (2004), we create functions to build up the graph, solve the optimization problem and finally display the chain graphically.

## Boyd, Diaconis, and Xiao. SIAM Rev. 46 (2004) pgs. 667-689 at pg. 672

## Form the complementary graph

antiadjacency <- function(g) {

n <- max(as.numeric(names(g))) ## Assumes names are integers starting from 1

a <- lapply(1:n, function(i) c())

names(a) <- 1:n

for(x in names(g)) {

for(y in 1:n) {

if(!(y %in% g[[x]]))

a[[x]] <- c(a[[x]], y)

}

}

a

}

## Fastest mixing Markov chain on graph g

FMMC <- function(g, verbose = FALSE) {

a <- antiadjacency(g)

n <- length(names(a))

P <- Variable(n, n)

o <- rep(1, n)

objective <- Minimize(norm(P - 1.0/n, "2"))

constraints <- list(P %*% o == o, t(P) == P, P >= 0)

for(i in names(a)) {

for(j in a[[i]]) { ## (i-j) is a not-edge of g!

idx <- as.numeric(i)

if(idx != j)

constraints <- c(constraints, P[idx,j] == 0)

}

}

prob <- Problem(objective, constraints)

result <- solve(prob)

if(verbose)

cat("Status: ", result$status, ", Optimal Value = ", result$value, ", Solver = ", result$solver)

list(status = result$status, value = result$value, P = result$getValue(P))

}

disp_result <- function(states, P, tolerance = 1e-3) {

if(!("markovchain" %in% rownames(installed.packages()))) {

rownames(P) <- states

colnames(P) <- states

print(P)

} else {

P[P < tolerance] <- 0

P <- P/apply(P, 1, sum) ## Normalize so rows sum to exactly 1

mc <- new("markovchain", states = states, transitionMatrix = P)

plot(mc)

}

}Results

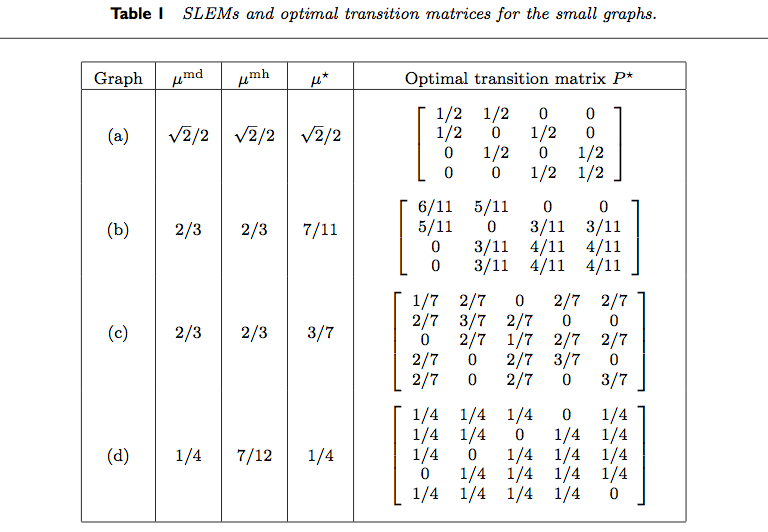

Table 1 from Boyd, Diaconis, and Xiao (2004) is reproduced below.

We reproduce the results for various rows of the table.

g <- list("1" = 2, "2" = c(1,3), "3" = c(2,4), "4" = 3)

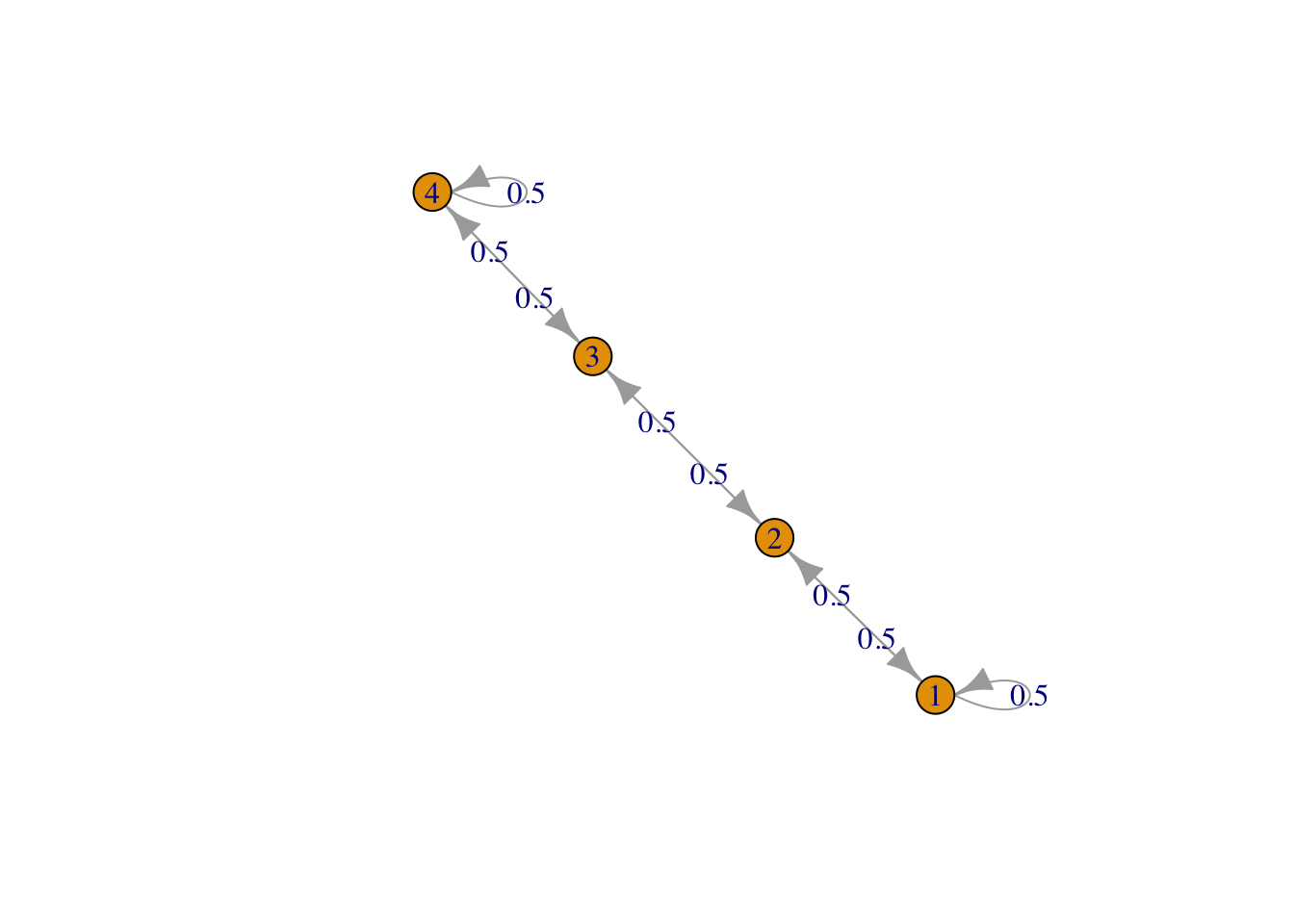

result1 <- FMMC(g, verbose = TRUE)## Status: optimal , Optimal Value = 0.7070928 , Solver = SCSdisp_result(names(g), result1$P)

Figure 1: Row 1, line graph

g <- list("1" = 2, "2" = c(1,3,4), "3" = c(2,4), "4" = c(2,3))

result2 <- FMMC(g, verbose = TRUE)## Status: optimal , Optimal Value = 0.6363443 , Solver = SCSdisp_result(names(g), result2$P)

Figure 2: Row 2, triangle plus one edge

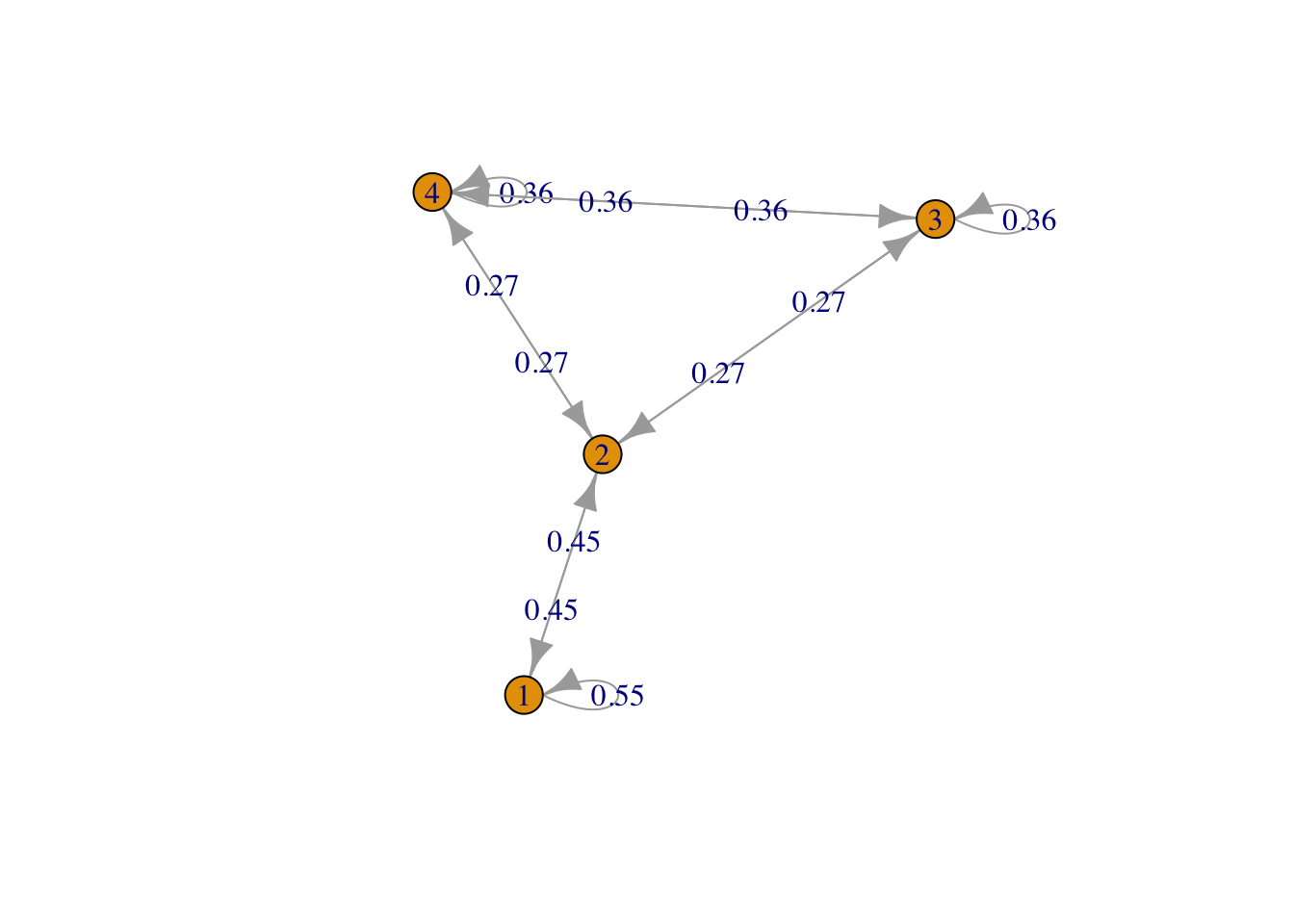

g <- list("1" = c(2,4,5), "2" = c(1,3), "3" = c(2,4,5), "4" = c(1,3), "5" = c(1,3))

result3 <- FMMC(g, verbose = TRUE)## Status: optimal , Optimal Value = 0.4285612 , Solver = SCSdisp_result(names(g), result3$P)

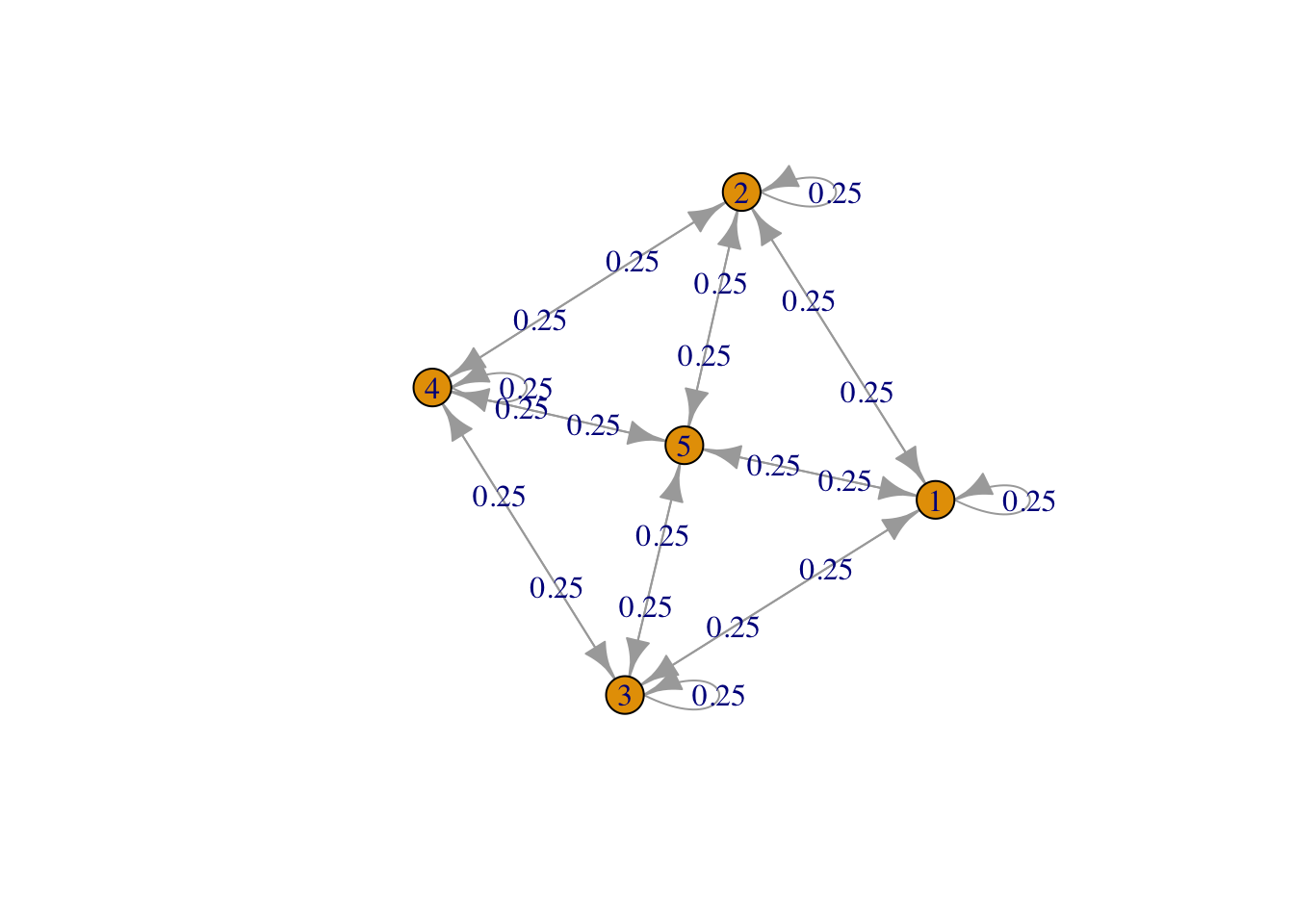

Figure 3: Bipartite 2 plus 3

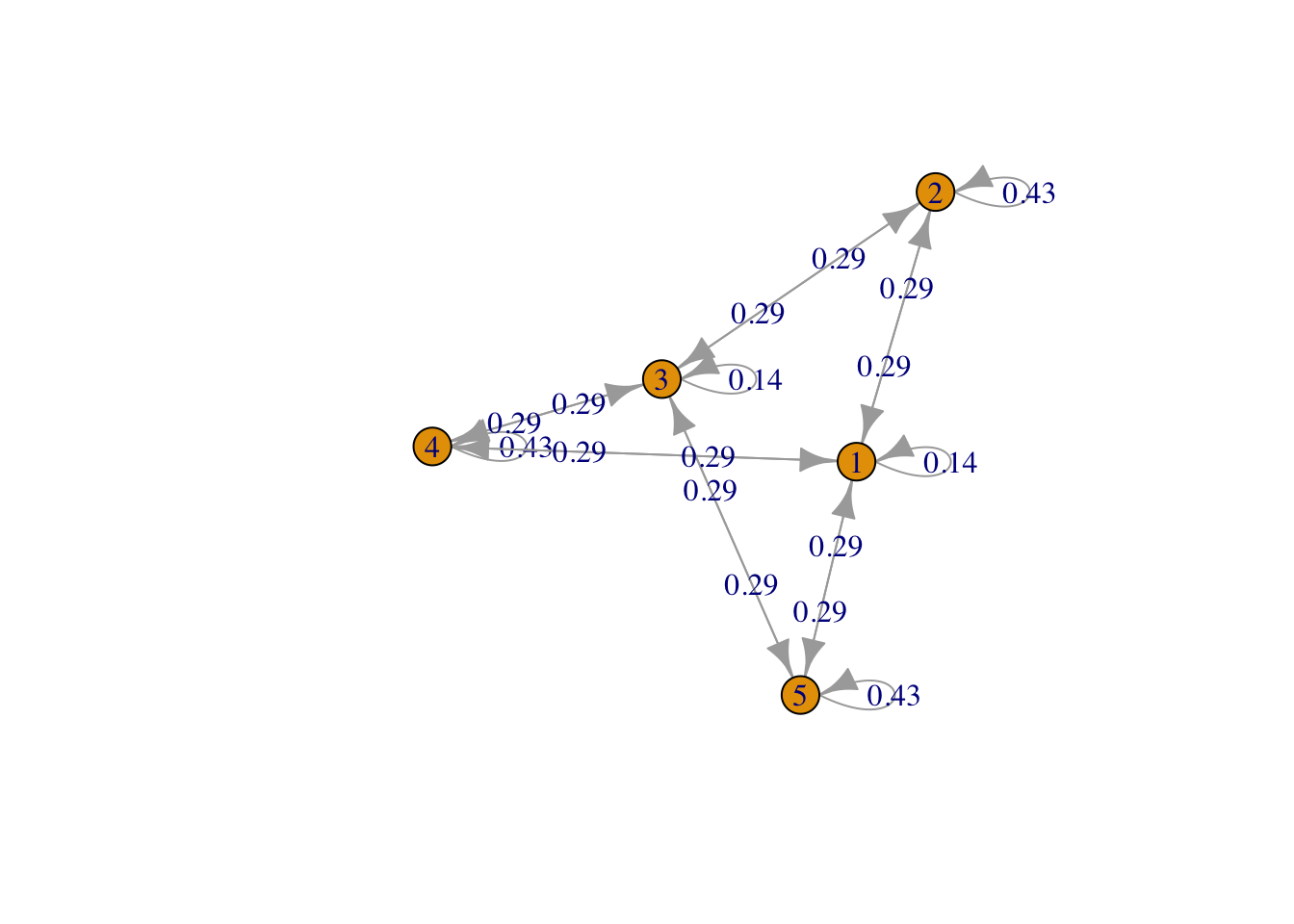

g <- list("1" = c(2,3,5), "2" = c(1,4,5), "3" = c(1,4,5), "4" = c(2,3,5), "5" = c(1,2,3,4,5))

result4 <- FMMC(g, verbose = TRUE)## Status: optimal , Optimal Value = 0.2500332 , Solver = SCSdisp_result(names(g), result4$P)

Figure 4: Square plus central point

## Testthat Results: No output is good## Error: `result2` not equal to fmix$result2.

## Component "P": Mean relative difference: 0.02312084Extensions

It is easy to extend this example to other Markov chains. To change

the number of vertices, we would simply modify n, and to add or

remove edges, we need only alter the constraints in constr2. For

instance, the bipartite chain in Figure 3 is produced by

setting n=5 and

constr2 <- list(P[1,3] == 0, P[2,4] == 0, P[2,5] == 0, P[4,5] == 0)Session Info

sessionInfo()## R version 4.4.2 (2024-10-31)

## Platform: x86_64-apple-darwin20

## Running under: macOS Sequoia 15.1

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.4-x86_64/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.4-x86_64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.0

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## time zone: America/Los_Angeles

## tzcode source: internal

##

## attached base packages:

## [1] stats graphics grDevices datasets utils methods base

##

## other attached packages:

## [1] markovchain_0.9.5 CVXR_1.0-15 testthat_3.2.1.1 here_1.0.1

##

## loaded via a namespace (and not attached):

## [1] gmp_0.7-5 utf8_1.2.4 sass_0.4.9 clarabel_0.9.0.1

## [5] slam_0.1-54 blogdown_1.19 lattice_0.22-6 digest_0.6.37

## [9] magrittr_2.0.3 evaluate_1.0.1 grid_4.4.2 bookdown_0.41

## [13] pkgload_1.4.0 fastmap_1.2.0 rprojroot_2.0.4 jsonlite_1.8.9

## [17] Matrix_1.7-1 brio_1.1.5 scs_3.2.4 fansi_1.0.6

## [21] Rmosek_10.2.0 codetools_0.2-20 jquerylib_0.1.4 cli_3.6.3

## [25] Rmpfr_0.9-5 rlang_1.1.4 expm_1.0-0 Rglpk_0.6-5.1

## [29] bit64_4.5.2 cachem_1.1.0 yaml_2.3.10 tools_4.4.2

## [33] parallel_4.4.2 Rcplex_0.3-6 rcbc_0.1.0.9001 gurobi_11.0-0

## [37] assertthat_0.2.1 vctrs_0.6.5 R6_2.5.1 stats4_4.4.2

## [41] lifecycle_1.0.4 bit_4.5.0 desc_1.4.3 pkgconfig_2.0.3

## [45] cccp_0.3-1 pillar_1.9.0 RcppParallel_5.1.9 bslib_0.8.0

## [49] glue_1.8.0 Rcpp_1.0.13-1 highr_0.11 xfun_0.49

## [53] knitr_1.48 htmltools_0.5.8.1 igraph_2.1.1 rmarkdown_2.29

## [57] compiler_4.4.2